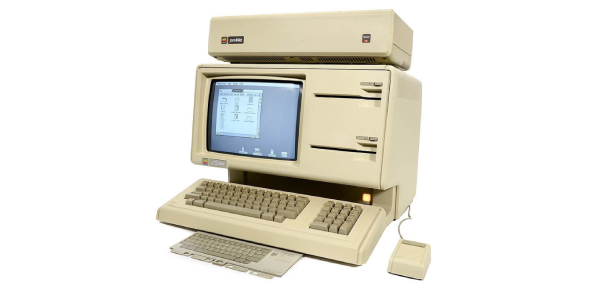

The Story of the Computer: A long journey of invention and innovation

The story of computers is a fascinating journey that spans centuries and involves numerous inventions, innovations, and pioneers. Here's a condensed version of the story:

Ancient Calculation Devices: The concept…