The story of computers is a fascinating journey that spans centuries and involves numerous inventions, innovations, and pioneers. Here’s a condensed version of the story:

- Ancient Calculation Devices: The concept of computational devices dates back to ancient civilizations. The abacus, developed around 2700 BCE, was one of the earliest known tools for performing calculations.

- Mechanical Calculators: In the 17th century, inventors like Blaise Pascal and Gottfried Wilhelm Leibniz built mechanical calculators. Pascal's machine could add and subtract, and Leibniz's Stepped Reckoner could also multiply and divide. These devices laid the groundwork for more complex machines.

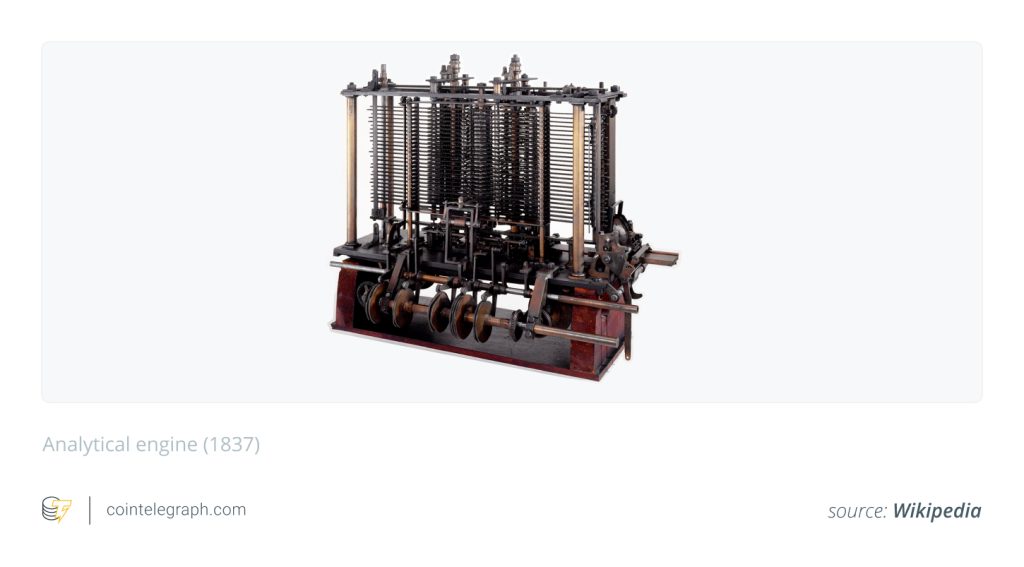

- Charles Babbage and the Analytical Engine (1837): Charles Babbage, an English mathematician and inventor, conceptualized the “Analytical Engine,” a general-purpose mechanical computer designed to be programmed with punched cards. Although it was never fully built during his lifetime, it is considered a precursor to modern computers.

- Ada Lovelace (1815-1852): Ada Lovelace, an English mathematician, is often credited with writing the world’s first computer program: an algorithm for computing Bernoulli numbers on Babbage’s Analytical Engine, published in her 1843 notes on the machine. Her work anticipated key programming concepts.

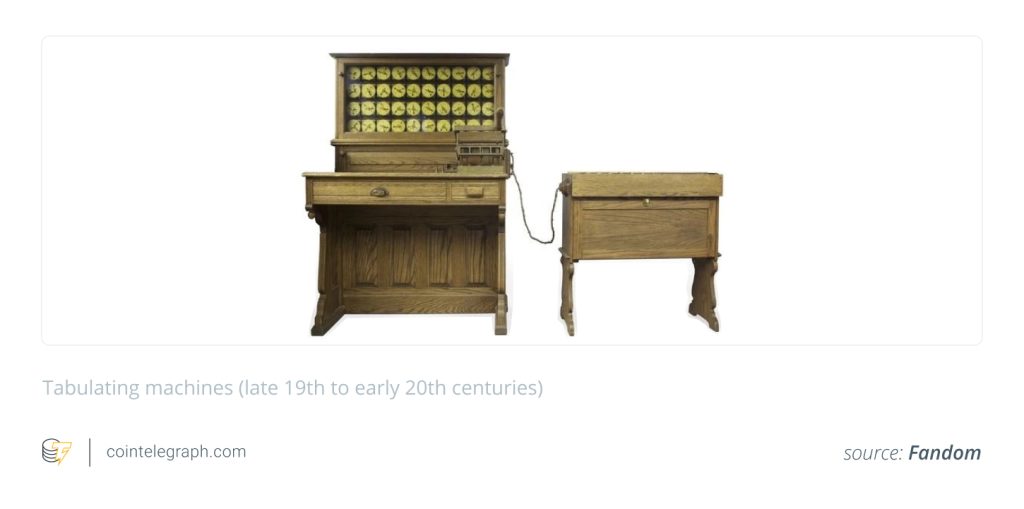

- Hollerith’s Tabulating Machine (1884): Herman Hollerith’s tabulating machine used punch cards to process and analyze data, particularly for the 1890 U.S. Census. This marked an important step in data processing.

- The Turing Machine (1936): Alan Turing’s theoretical concept of the Turing Machine provided a formal framework for understanding computation. It is a crucial concept in the development of theoretical computer science.

- ENIAC (1945): The Electronic Numerical Integrator and Computer (ENIAC) is considered one of the earliest general-purpose electronic computers. It was used for scientific and military calculations.

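- UNIVAC I (1951): The Universal Automatic Computer (UNIVAC I) was the first commercially produced computer in the United States, delivered to the U.S. Census Bureau in 1951. It did not introduce the stored-program concept, which earlier machines such as the Manchester Baby (1948) and EDSAC (1949) had already demonstrated, but it helped bring stored-program computers into business and government use.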

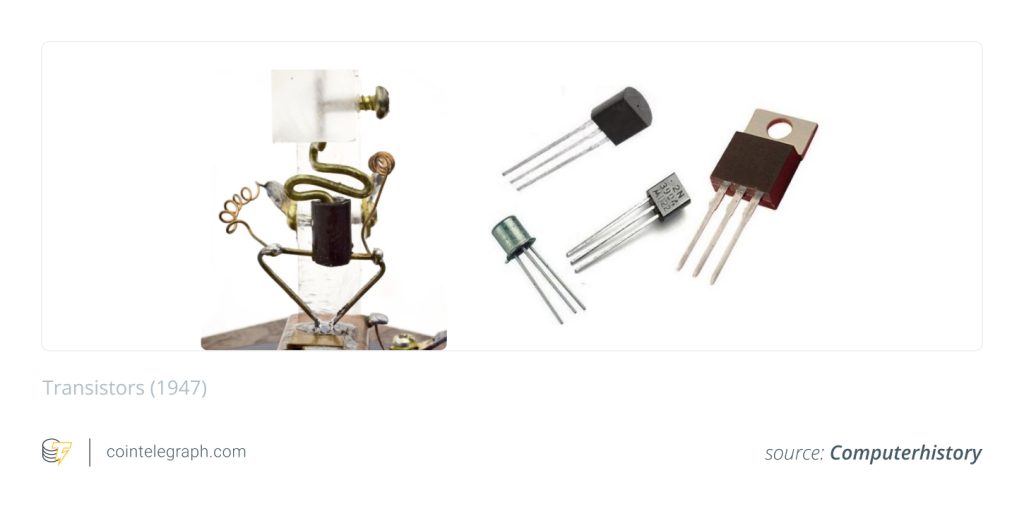

- Transistors and Integrated Circuits: The invention of the transistor in 1947 and of the integrated circuit in the late 1950s revolutionized computing by making devices smaller, more reliable, and more energy-efficient.

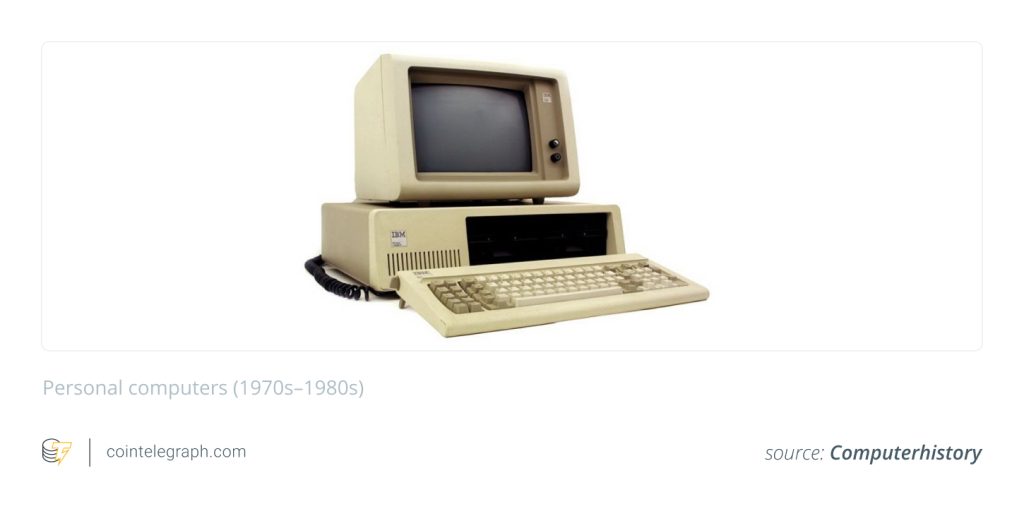

- Mainframes, Minicomputers, and Microcomputers: In the 1960s and 1970s, mainframe computers dominated large-scale computing, while minicomputers provided more affordable options. The 1970s also saw the rise of microcomputers, or personal computers, with the introduction of the Altair 8800 and Apple I.

- The Personal Computer Revolution: The 1980s saw the personal computer market explode with the IBM PC (1981) and the rise of Microsoft’s MS-DOS operating system. Graphical User Interfaces (GUIs) reached a mass audience with the release of the Apple Macintosh in 1984.

- Internet and World Wide Web (1990s): Tim Berners-Lee’s invention of the World Wide Web (first proposed in 1989) and the subsequent growth of the internet transformed communication, information sharing, and business.

- Mobile Computing and Smartphones (2000s): Mobile devices, particularly smartphones, became increasingly powerful and connected, changing how people interacted with technology and each other.

- Cloud Computing and AI: The 2010s witnessed the proliferation of cloud computing, allowing remote storage and processing, as well as significant advancements in artificial intelligence and machine learning.

- Quantum Computing: In recent years, quantum computing has emerged as a cutting-edge field with the potential to solve certain classes of problems, such as factoring large integers, much faster than classical computers.

The story of computers continues to evolve, with ongoing developments in fields like quantum computing, artificial intelligence, and more. The journey from ancient calculation devices to today’s powerful and interconnected devices is a testament to human ingenuity and innovation.